by John Durham Peters

The essay begins:

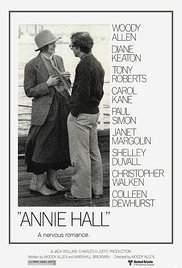

In Woody Allen’s romantic comedy Annie Hall (1977), the world’s most famous technological determinist had a brief cameo that in some circles is as well-known as the movie itself. Woody Allen, waiting with Diane Keaton in a slow-moving movie ticket line, pulls Marshall McLuhan from the woodwork to rebuke the blowhard in front of them, who is pontificating to his female companion about McLuhan’s ideas. McLuhan, as it happened, was not an easy actor to work with: even when playing a parody of himself, a role he had been practicing full-time for years, he couldn’t remember his lines, and when he could remember them, he couldn’t deliver them. In the final take (after more than fifteen tries), McLuhan tells the mansplainer, “I heard what you were saying. You, you know nothing of my work. You mean my whole fallacy is wrong. How you ever got to teach a course in anything is totally amazing.” In the film, the ability to call down ex cathedra authorities at will to silence annoying know-it-alls is treated as the ultimate in wish fulfillment as Allen says to the camera, “Boy, if life were only like this!” Rather than a knockout punch, however, McLuhan tells the man off with something that sounds like a Zen koan, an obscure private joke, or a Groucho Marx non sequitur. There is more going on here than a simple triumph over someone else’s intellectual error. Isn’t a fallacy always self-evidently wrong?

That a fallacy might not necessarily be wrong is the question I take up in this essay. Whatever technological determinism is, it is one of a family of pejoratives by which academics reprove their fellows for single-minded devotion (or monomaniacal fanaticism) to their pet cause. At least since “sophist” was launched as a slur in ancient Greece, it has been a regular sport to contrive doctrines that nobody believes and attribute them to one’s enemies. Terms ending with –ism serve this purpose particularly well. As Robert Proctor notes in an amusing and amply documented survey of academic nomenclature, “‘Bias’ and ‘distortion’ are perennial terms of derision from the center, and the authors of such slants are often accused of having fallen into the grip of some blinding ‘-ism.’” Often these -isms, he continues, “are things no one will openly claim to support: terrorism, dogmatism, nihilism, and so on.” (Racism and sexism are even better examples.) Terms ending with –ism, such as economism, fetishism, formalism, physicalism, positivism, and scientism, often stand for “zealotry or imprudence in the realm of method,” with reductionism standing for the whole lot. Corresponding nouns ending with –ist designate those people accused of harboring such doctrines—reductionist, fetishist, formalist—though –ist is a tricky particle. Artist, economist, psychologist, and above all, scientist have positive valences; artistic is a term of praise, but scientistic suggests being in the grip of an ideology. (It might be bad to be a positivist, but Trotskyist is strongly preferred to Trotskyite; it would be a big job to fully describe the whimsical behavior of the –ism clan, tasked as it is with policing ultrafine differences.) Pathologies such as logocentrism, phallogocentrism, and heteronormativity are often diagnosed in people who do not realize they are carriers.

Technological determinism belongs to this family of conceptual maladies thought unknown to their hosts but discernible by a savvy observer. It is one of a long line and large lexicon of academic insults and prohibitions. As old as academic inquiry is the conviction of the blindness of one’s fellow inquirers. From listening to the ways scholars talk about each other, you would not think they were a collection of unusually brainy people but rather a tribe uniquely susceptible to folly and stupidity. The cataloging of fallacies has been motivated by a desire to regulate (or mock) the thinking of the learned as much as of the crowd. The academy has been fertile soil for satirists from the ancient comic playwrights to Erasmus and Rabelais, from Swift and the Encyclopédie to Nietzsche to the postwar campus novel. Whatever else Renaissance humanism was, it was a critique of scholarly vices, and Erasmus’s Praise of Folly is, among other things, a compendium of still relevant witticisms about erudite errors. There are as many fallacies as chess openings, and the names of both index exotic, often long-forgotten historical situations. We shouldn’t miss the element of satire and parody in such cartoonish names as the red herring, bandwagon, card-stacking, and cherry-picking fallacies. “The Texas sharpshooter fallacy” is drawing the target after you have taken the shots. The “Barnum effect” describes the mistake of taking a trivially general statement as uniquely significant (as in fortune cookies or astrology readings). The study of fallacies gives you a comic, sometimes absurdist glance at the varieties of cognitive tomfoolery.

One reason why academic life is the native soil for the detection of fallacies is the great profit that can be wrung from strategic blindness. Looking at the world’s complexity through a single variable—air, fire, water, God, ideas, money, sex, genes, media—can be immensely illuminating. (Granting agencies smile on new “paradigms.”) A key move in the repertoire of academic truth games is noise-reduction. John Stuart Mill once noted of “one-eyed men” that “almost all rich veins of original and striking speculation have been opened by systematic half-thinkers.” A less single-minded Marx or Freud would not have been Marx or Freud. Intellectuals can be richly rewarded for their cultivated contortions.

But one man’s insight is another man’s blindness. The one-eyed gambit invites the counterattack of showing what has gone unseen, especially as prophetic vision hardens into priestly formula. Nothing quite whets the academic appetite like the opportunity to prove what dullards one’s fellows are, and, for good and ill, there never seems to be any shortage of material. (We all know people who think they can score points during Q&A sessions by asking why their favorite topic was not “mentioned.” Someone should invent a fallacy to name that practice.) At some point every scholar has felt the itch to clear the ground of previous conceptions and ill-founded methods; this is partly what “literature review” sections are supposed to do. (The history of the study of logic is littered with the remains of other people’s attempted cleanup jobs.) Scholars love to upbraid each other for being trapped by the spell of some nefarious influence. How great the pleasure in showing the folly of someone’s –istic ways! The annals of academic lore are full of tales of definitive takedowns and legendary mic drops, and social media platforms such as Facebook provide only the most recent germ culture for the viral spread of delicious exposés of the ignorant (as often political as academic). This is one reason McLuhan’s Annie Hall cameo continues to have such resonance: it is the archetype of a decisive unmasking of another scholar’s fraudulence or ignorance.

But it is also a classic fallacy: the appeal to authority. Who says McLuhan is the best explicator of his own ideas? As he liked to quip: “My work is very difficult: I don’t pretend to understand it myself.” You actually get a better sense of what McLuhan wrote from the blowhard, however charmlessly presented, than from McLuhan. The disagreeable truth is that what the man is doing isn’t really that awful or that unusual: it is standard academic behavior in the classroom at least, if not the movie line. Laughing at someone teaching a course on “TV, media, and culture” is, for many of us, not to recognize ourselves in the mirror. The fact that so many academics love the Annie Hall put-down is one more piece of evidence showing our vulnerability to fallacious modes of persuasion. Why should we delight in the silencing of a scholar by the gnomic utterances of a made-for-TV magus? Since when is silencing an academic value? And by someone who doesn’t really make any sense?

Silencing is one thing that the charge of technological determinism, like many other so-called fallacies, does. Fallacies need to be understood within economies and ecologies of academic exchange. They are not simply logical missteps. To accuse another of a fallacy is a speech act, a communicative transaction. The real danger of technological determinism may be its labeling as a fallacy. The accusation, writes Geoffrey Winthrop-Young, “frequently contains a whiff of moral indignation. To label someone a technodeterminist is a bit like saying that he enjoys strangling cute puppies; the depraved wickedness of the action renders further discussion unnecessary.” The threat of technological determinism, according to Wolf Kittler, “goes around like a curse frightening students.” The charge can conjure up a kind of instant consensus about what right-minded people would obviously avoid. The charge of technological determinism partakes of a kind of “filter bubble” logic of unexamined agreement that it’s either machines or people. Jill Lepore recently put it with some ferocity: “It’s a pernicious fallacy. To believe that change is driven by technology, when technology is driven by humans, renders force and power invisible.”

There are undeniably many vices and exaggerations around the concept of technology. But my overarching concern here is not to block the road of inquiry. (No-go zones often have the richest soil.) In a moment when the meaning of technics is indisputably one of the most essential questions facing our species, do we really want to make it an intellectual misdemeanor to ask big questions about “technology” and its historical role, however ill-defined the category is? What kinds of inquiry snap shut if we let the specter of technological determinism intimidate us? The abuse does not ruin the use. The question is particularly pointed for my home field of media studies, whose task is to show that form, delivery, and control, as well as storage, transmission, and processing, all matter profoundly. If explanations attentive to the shaping role of technological mediation are ruled out, the raison d’être of the field is jeopardized. It is so easy to sit at our Intel-powered computers and type our latest critique of technological determinism into Microsoft Word files while Googling facts and checking quotes online. We are so busy batting away the gnats of scholarly scruples to notice that we have swallowed a camel.

This essay, a contribution to the special issue “Fallacies,” offers both a genealogy of the concept of technological determinism and a metacritique of the ways academic accusations of fallaciousness risk stopping difficult but essential kinds of inquiry. To call someone a technological determinist is to claim all the moral force on your side without answering the question of what we are to do with these devices that infest our lives.

JOHN DURHAM PETERS is María Rosa Menocal Professor of English and Film and Media Studies at Yale and author of The Marvelous Clouds: Toward a Philosophy of Elemental Media (2015).

This essay by D. Vance Smith locates the beginning of philosophy in Aristotelian syllogistic logic, where fallacy is the precondition of rationality. Smith then turns to medieval commentaries, which treat fallacy as a nonreferential discourse and develop what is essentially a theorization of fictionality and its practices.
